OpenAI GPT-4.1 Release: More Powerful AI Features & Affordable Pricing Explained

Just now, OpenAI released three new models:

GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano.

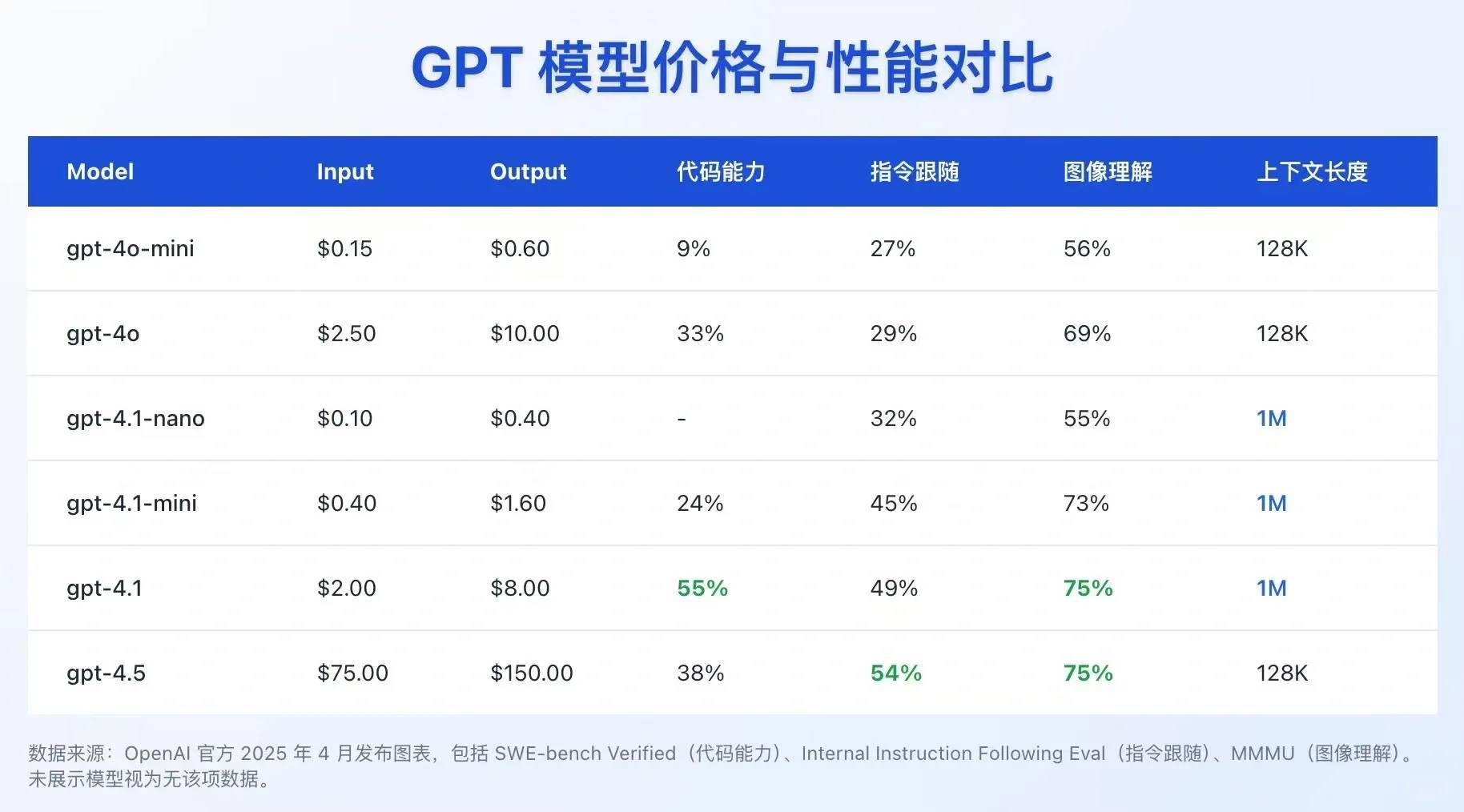

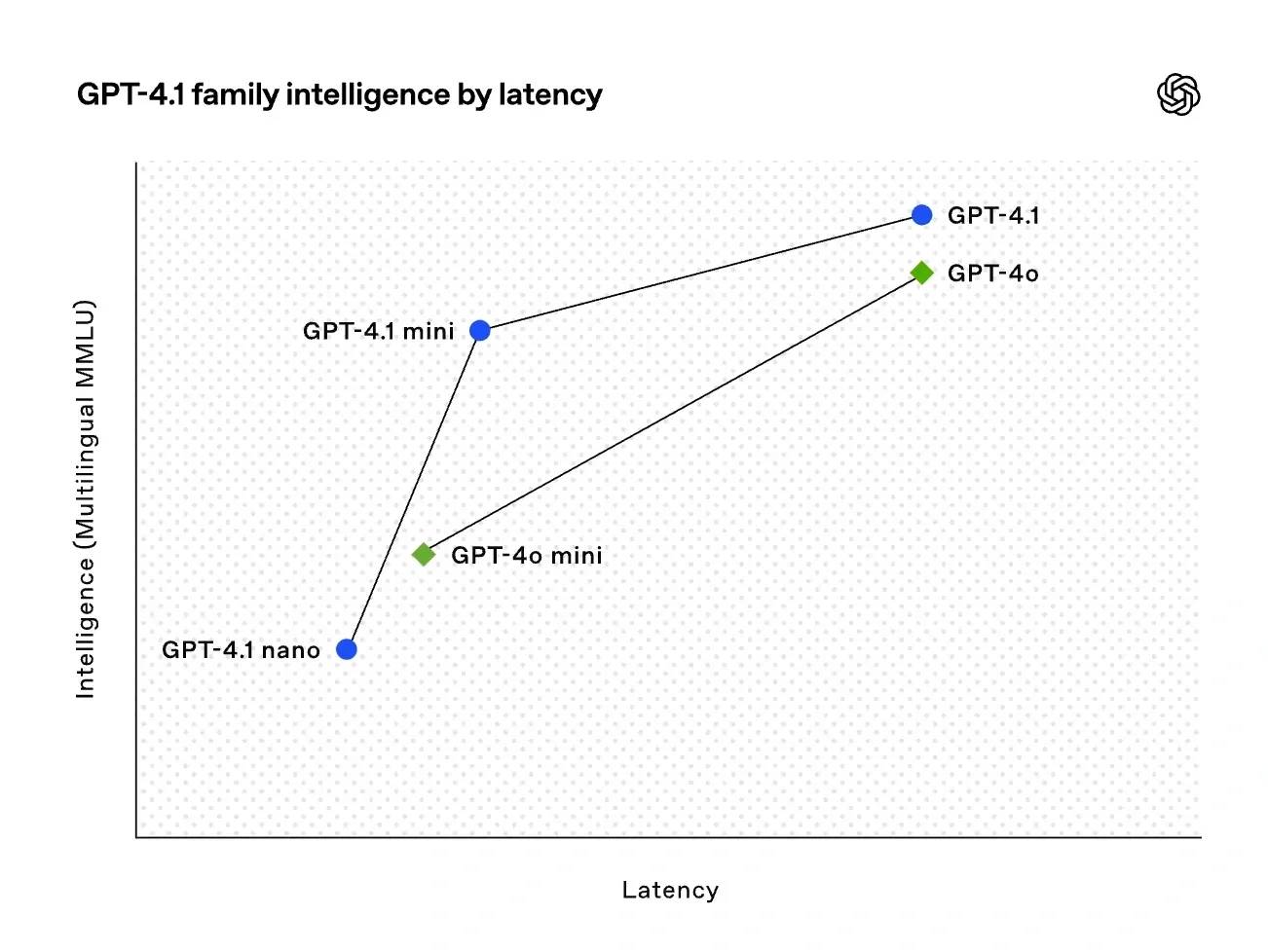

These models outperform GPT-4o and GPT-4o mini across the board (Figure 2️⃣).

They even beat GPT-4.5 in places (which sounds absurd: 4.1 > 4.5 🤣).

(But they’re only available via API.)

The GPT-4.1 family shows significant improvements in coding and instruction-following.

All three models support a context window of up to 1 million tokens (Figure 3️⃣, from Cyber Zen Heart).

Core comparison of the GPT-4.1 family ⬇️

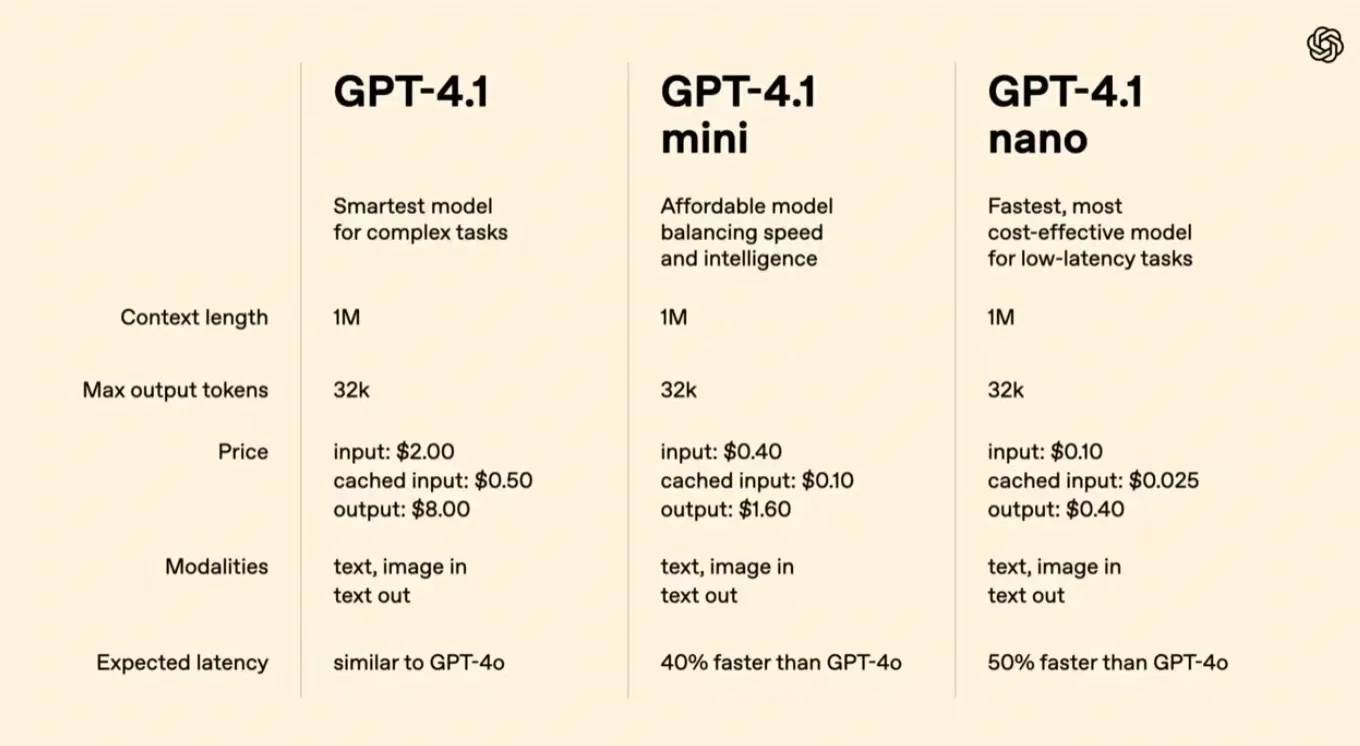

1️⃣ Model Positioning & Configurations

· GPT-4.1: The strongest model in the family, aimed at complex tasks

Max output: 32k tokens

Modality: Text, image input + text output

· GPT-4.1 mini

Positioning: Balanced speed and cost

Same configuration as GPT-4.1, at a lower price

· GPT-4.1 nano

Positioning: Low latency, high cost-efficiency

Same configuration as the rest of the family, with about 50% lower latency than GPT-4o
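To make the configuration numbers concrete, here is a minimal sketch of a context-budget check. It uses the 1-million-token window and the 32k output cap quoted above (read here as 32,000 tokens). The 4-characters-per-token ratio and the function names are my own assumptions for illustration, as is the idea that the output allowance counts against the same window. A real tokenizer would give exact counts.

```python
# Figures quoted in the post.
CONTEXT_WINDOW_TOKENS = 1_000_000
MAX_OUTPUT_TOKENS = 32_000  # "32k", taken here as 32,000

# Assumption: roughly 4 characters per token for English text.
# This is a rule of thumb, not an exact tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(prompt: str, reserved_output: int = MAX_OUTPUT_TOKENS) -> bool:
    """True if the estimated prompt size plus reserved output fits the window."""
    return estimate_tokens(prompt) + reserved_output <= CONTEXT_WINDOW_TOKENS
```

Under these assumptions, a prompt of about 3 million characters still fits with the full output allowance reserved, while one of 4 million characters does not.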

2️⃣ Performance

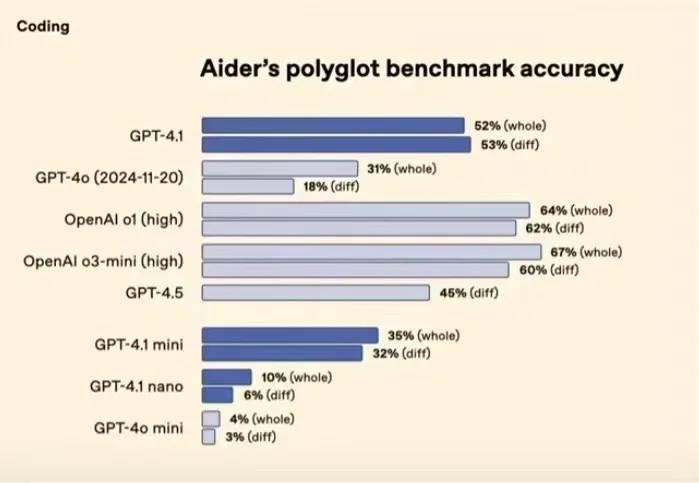

◦ Coding tasks (Aider benchmark, Figure 4️⃣)

▪ 4.1 (52%), 4.1 mini (35%), 4.1 nano (10%)

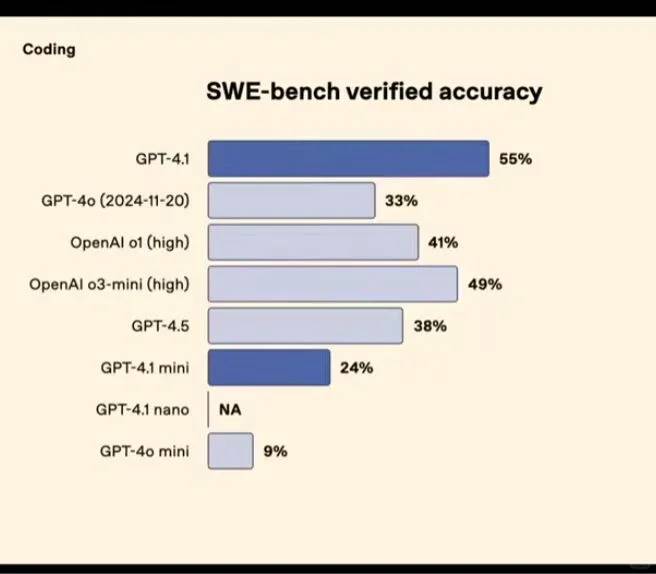

◦ SWE-bench (Figure 5️⃣)

▪ 4.1 (55%), mini (24%)

◦ Video tasks (Video-MME)

▪ 4.1 (72%) significantly outperforms 4o (65%)

3️⃣ Pricing & Cost

◦ Input cost (per million tokens)

▪ 4.1 ($2.00), mini ($0.40), nano ($0.10)

◦ Output cost (per million tokens)

▪ 4.1 ($8.00), mini ($1.60), nano ($0.40)
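To see what these prices mean per request, here is a small sketch that turns the per-million-token rates above into a dollar cost. The dictionary keys and the function name `request_cost` are labels I picked for illustration; only the prices come from the post.

```python
# USD per million tokens, copied from the pricing list above.
PRICES = {
    "gpt-4.1": {"input": 2.00, "output": 8.00},
    "gpt-4.1-mini": {"input": 0.40, "output": 1.60},
    "gpt-4.1-nano": {"input": 0.10, "output": 0.40},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated cost in USD for one request at the listed rates."""
    rates = PRICES[model]
    total = input_tokens * rates["input"] + output_tokens * rates["output"]
    return total / 1_000_000


if __name__ == "__main__":
    # Example workload: 100,000 input tokens and 2,000 output tokens.
    for name in PRICES:
        print(f"{name}: ${request_cost(name, 100_000, 2_000):.4f}")
```

For that example workload the estimate is about $0.216 on GPT-4.1, $0.0432 on mini, and $0.0108 on nano, so nano comes out 20 times cheaper than the full model at these rates.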

The GPT-4.1 series offers different tiers (standard, mini, nano)

to meet needs from complex tasks to low-cost real-time scenarios.

Recommended use cases ⬇️

◦ High-performance needs: GPT-4.1 or o3-mini-high (67% accuracy)

◦ Real-time, low latency: 4.1 nano (fastest and cheapest)

Nano is currently the fastest + most affordable model.
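The recommendations above can be sketched as a tiny routing rule. This is only an illustration of the positioning described in this post: `pick_tier` and its two flags are hypothetical, and a real application would likely weigh more factors.

```python
def pick_tier(complex_task: bool, latency_sensitive: bool) -> str:
    """Hypothetical helper mapping the post's positioning to a tier name."""
    if complex_task:
        return "GPT-4.1"  # strongest model, for complex tasks
    if latency_sensitive:
        return "GPT-4.1 nano"  # lowest latency and lowest price
    return "GPT-4.1 mini"  # balance of speed and cost
```

For example, `pick_tier(complex_task=False, latency_sensitive=True)` returns `"GPT-4.1 nano"`, matching the real-time recommendation.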

Follow me for more high-quality updates—

I’ll keep bringing you the latest AI industry trends and insights 😎

@TechPotato

Wow, the 1-million-token context length is insane! I’m really curious to see how these new features will change app development. It seems like coding with GPT-4.1 could be super efficient. I wonder when we’ll get access through an interface instead of just API.

Absolutely, the expanded context length opens up so many possibilities for developers! While I don’t have a specific timeline, I’m sure direct interfaces are on their roadmap to make it even more accessible. Exciting times ahead for app development and coding workflows! Thanks for your great question.

Wow, the 1-million-token context length is insane! I’m really curious to see how these improvements play out in real-world applications, especially with coding tasks. It’s cool that OpenAI is pushing the boundaries, though access via API feels limiting for individual users like me.

Wow, the 1-million-token context length is insane! I’m really curious to see how these improvements play out in real-world applications, especially for complex coding tasks. It’s exciting that OpenAI is pushing the boundaries like this, though it’s a bummer they’re not more widely accessible right now.

Absolutely, the expanded context length opens up so many possibilities! We’re excited to see developers and businesses leverage this for innovative solutions, especially in areas like code generation and analysis. While access is limited for now, OpenAI continues to work toward broader availability. Thanks for your interest—it shows there’s a lot of excitement around these advancements!

Wow, the 1-million-token context length is insane! I’m really curious to see how these improvements will impact real-world applications, especially with coding tasks. It’s exciting that OpenAI is pushing the boundaries like this. I wonder when we’ll see these models become more accessible to individual users.

Absolutely, the expanded context length opens up incredible possibilities for complex coding and multi-step reasoning tasks. We’re likely to see more user-friendly access options in the near future as the technology matures. It’s an exciting time to explore what these advancements can do! Thanks for your thoughtful question—it really highlights the potential impact on everyday users.

Wow, the fact that GPT-4.1 can handle a million tokens is insane! I’m really curious to see how these new features will change the game for developers. The pricing sounds promising too, but I hope it stays accessible as more advanced models come out. Exciting times ahead!

Wow, the fact that GPT-4.1 can handle a million tokens is insane! I’m really curious to see how these improvements will impact real-world applications, especially with coding tasks. The affordable pricing could make it accessible for more developers too.

Absolutely agree! Handling a million tokens opens up incredible possibilities, especially for complex coding tasks and large-scale data processing. It’s exciting to think about how this can democratize access to powerful AI tools, helping developers build more innovative solutions. Thanks for your insightful comment—this is going to be a game-changer for sure!

Wow, the 1-million-token context length is insane! I’m really curious to see how these new features will change app development once they’re more widely accessible. It seems like OpenAI is really pushing the boundaries with these updates. I wonder what they’ll do for creative projects too!

Absolutely, the expanded context length opens up so many possibilities! It’ll definitely revolutionize app development by enabling more complex and dynamic interactions. I’m equally excited to see how creators can leverage this for storytelling, art, and beyond. Thanks for your insightful thoughts—let’s stay tuned together!

Wow, the 1-million-token context length is insane! I’m really curious to see how these new features will change app development. If they’re even better than GPT-4.5, that’s a big deal. I hope they become more widely accessible soon.

Absolutely, the expanded context length is quite revolutionary! It’ll indeed open up new possibilities for app development and innovation. We’re excited to see how developers leverage these features too. Thanks for your interest—stay tuned, as updates on accessibility are coming soon!

Wow, the coding and instruction-following capabilities sound impressive! I’m curious how the different versions within the GPT-4.1 family will be used by developers since they’re only available via API. It’ll be interesting to see what kind of projects people build with that much context length!

Thank you for your great question! Developers will likely use the different GPT-4.1 versions to handle complex tasks that require precise tuning or specific features, like enhanced security or multilingual support. The longer context length opens up exciting possibilities—imagine building intelligent assistants or comprehensive data analysis tools! I can’t wait to see what innovative projects the community creates next.

Wow, the 1-million-token context length is insane! I’m really curious to see how these new features will change the game for developers, especially with the improved coding abilities. It’ll be interesting to see if the price stays as affordable as promised once it launches more widely.

Wow, the 1-million-token context length is a game-changer! Though it’s a bit confusing why they’d name it 4.1 if it outperforms 4.5 – feels like version numbers are losing meaning. Excited to see how the coding improvements play out in real-world use!

Wow, a 1-million-token context window is insane! The performance jump from GPT-4o to 4.1 seems bigger than I expected, especially for coding tasks. Kinda funny how version numbers don’t always make sense (4.1 > 4.5 lol).

Wow, the 1-million-token context length is a game-changer! Though I’m a bit confused why they’re calling it 4.1 when it seems more advanced than 4.5 – that numbering system feels backwards. Excited to see how these models perform in real-world coding tasks!

Great question! The 4.1 version number likely reflects incremental improvements rather than a major architectural overhaul, even though features like context length feel revolutionary. I agree the numbering can be confusing—personally, I’d love clearer version labels too! Can’t wait to hear how it handles your coding projects—thanks for sharing your excitement!

Wow, a 1-million-token context is a massive leap forward! The performance boost over GPT-4o is really impressive, especially for coding tasks. Can’t wait to try the API and see the difference myself.

Thanks for your kind words! I’m also incredibly excited about the 1M-token context. It truly feels like a game-changer for complex tasks. I think you’ll be really impressed by the API’s performance, especially for coding. Can’t wait to hear what you think once you try it out!