Where to Find the Best Open Source Qwen3 Evaluation and Comparison

What has everyone been waiting for? In short, a new open-source model that pairs deep reasoning with fast responses.

⭐ Model Features: Deep Thought Meets Lightning Speed

🚀 Introducing Qwen3, billed as the most powerful open-source model to date and reported to outperform DeepSeek R1 across the board. It is described as the first Chinese-developed model to beat R1 comprehensively, where earlier models could only match its performance.

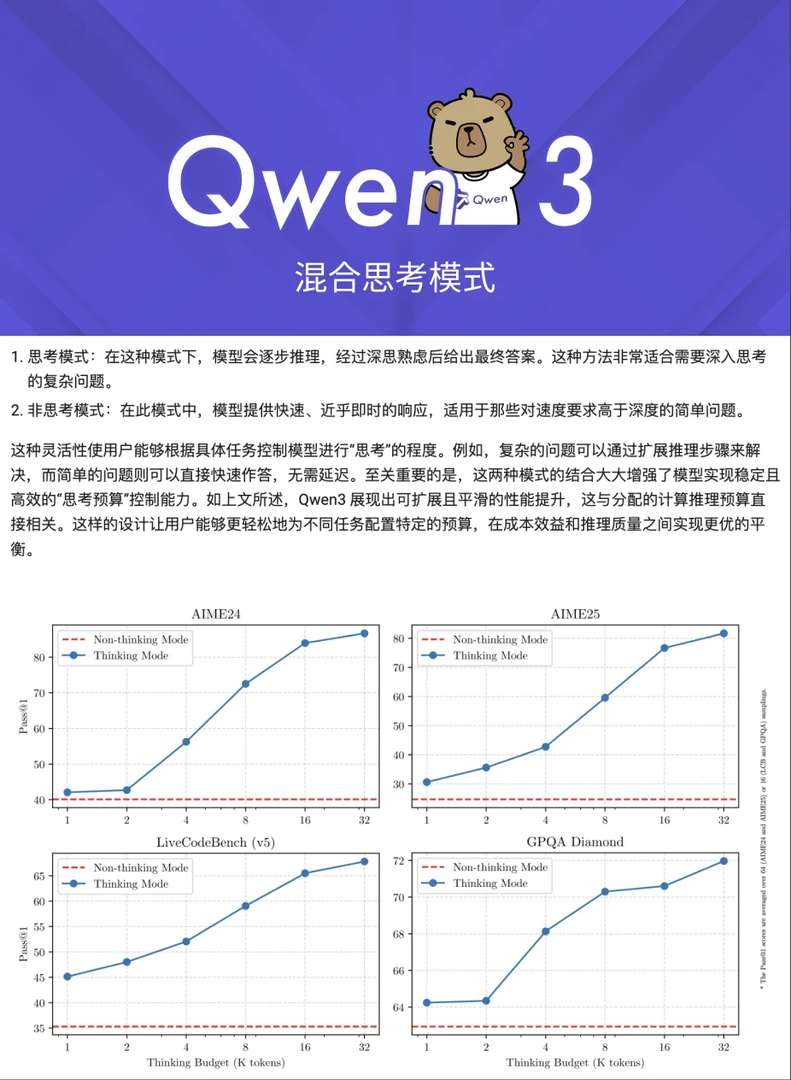

🤖 Qwen3 is China’s first hybrid reasoning model. It can reason step by step through complex questions 🧠 and answer straightforward queries immediately ⚡. Switching between the two modes lets it spend compute only where deeper reasoning is needed.
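The mode switch described above can be sketched in a few lines. This is a minimal illustration, not an official client: it assumes the `/think` and `/no_think` soft-switch tags that the Qwen3 documentation describes for chat prompts, and the `<think>...</think>` block that wraps the reasoning trace in a reply. The helper names (`with_mode`, `split_reasoning`) and the sample reply are hypothetical, and no model is actually called.

```python
import re
from typing import Dict, List, Tuple

# Assumption: Qwen3 chat prompts accept "/think" and "/no_think" soft switches,
# and reasoning is returned inside a <think>...</think> block.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def with_mode(prompt: str, deep: bool) -> List[Dict[str, str]]:
    """Build a chat message list that requests the thinking or the fast mode."""
    tag = "/think" if deep else "/no_think"
    return [{"role": "user", "content": f"{prompt} {tag}"}]


def split_reasoning(reply: str) -> Tuple[str, str]:
    """Separate the reasoning trace (if any) from the final answer."""
    match = THINK_BLOCK.search(reply)
    reasoning = match.group(1).strip() if match else ""
    answer = THINK_BLOCK.sub("", reply).strip()
    return reasoning, answer


# Hypothetical reply text, used only to exercise the parser.
sample_reply = "<think>17 is not divisible by 2, 3, or 5.</think>\nYes, 17 is prime."
messages = with_mode("Is 17 prime?", deep=True)
reasoning, answer = split_reasoning(sample_reply)
```

In a real setup the `messages` list would be passed to whatever chat interface serves the model; keeping the reasoning separate from the answer makes it easy to show or hide the trace.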

💡 Deployment requirements drop sharply. The flagship model can be deployed locally on just 4 H20 GPUs 💻, cutting costs by more than 60% compared with R1.

🧑‍💻 Agent capabilities are stronger as well: Qwen3 natively supports the Model Context Protocol (MCP) and shows a marked improvement in coding. Chinese agent tools have been eagerly awaiting its release.

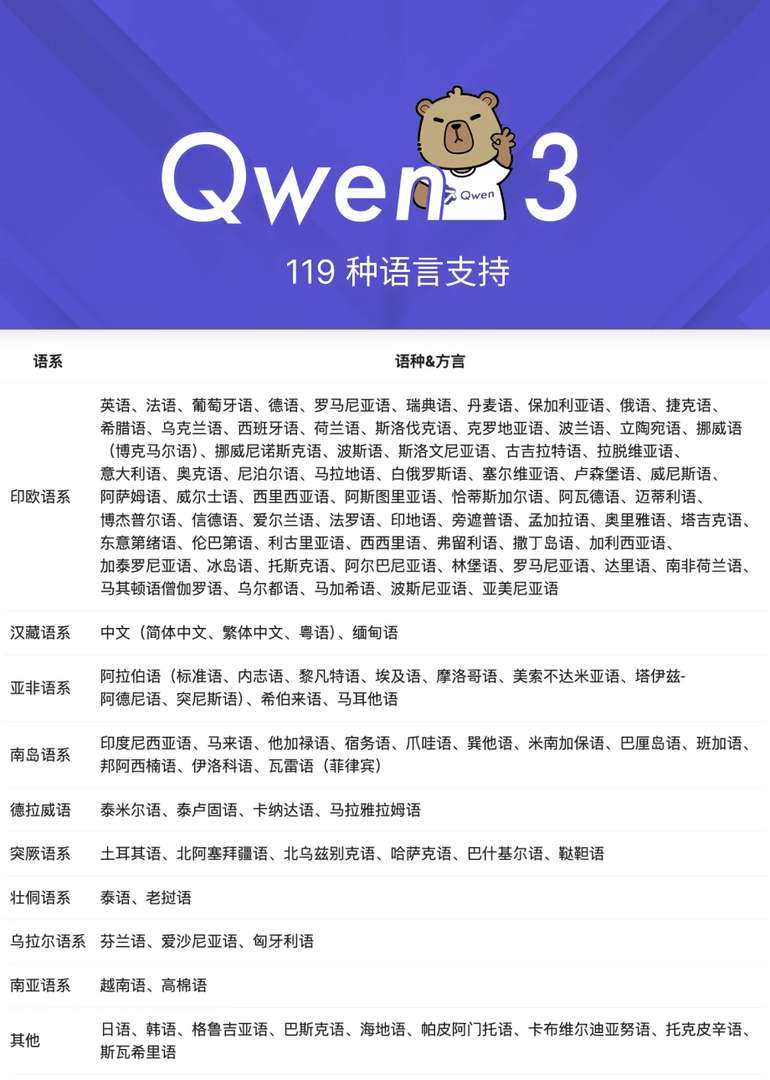

🌏 Qwen3 supports 119 languages and dialects, including regional languages such as Javanese and Haitian Creole, which extends access well beyond the major world languages.

📊 Qwen3 was trained on 36 trillion tokens, double the amount used for Qwen2.5. The training data covers not only web content but also extensive PDF material and synthesized code snippets.

💰 Deployment is also cheaper. The flagship model needs just 4 H20 GPUs, one-third of what R1 requires, which puts high-performance deployment within reach of more teams.
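As a rough sanity check, the "one-third" figure and the "over 60%" saving quoted in this article line up if cost is assumed to scale linearly with GPU count. That linear scaling is an assumption, and the 12-GPU figure for R1 below is only inferred from "one-third", not stated anywhere in the article.

```python
# Back-of-the-envelope check. Assumption: cost scales linearly with GPU count.
QWEN3_GPUS = 4                # stated in the article
R1_GPUS = QWEN3_GPUS * 3      # inferred from "one-third", not an official figure


def relative_saving(new: int, old: int) -> float:
    """Fraction of the old cost saved by moving to the new setup."""
    return 1 - new / old


saving = relative_saving(QWEN3_GPUS, R1_GPUS)
print(f"Implied R1 GPU count: {R1_GPUS}")
print(f"Implied saving: {saving:.1%}")
```

Under that assumption the saving works out to about two-thirds, which is consistent with a claim of "over 60%". Real costs also depend on GPU pricing, precision, and utilization, none of which this sketch models.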

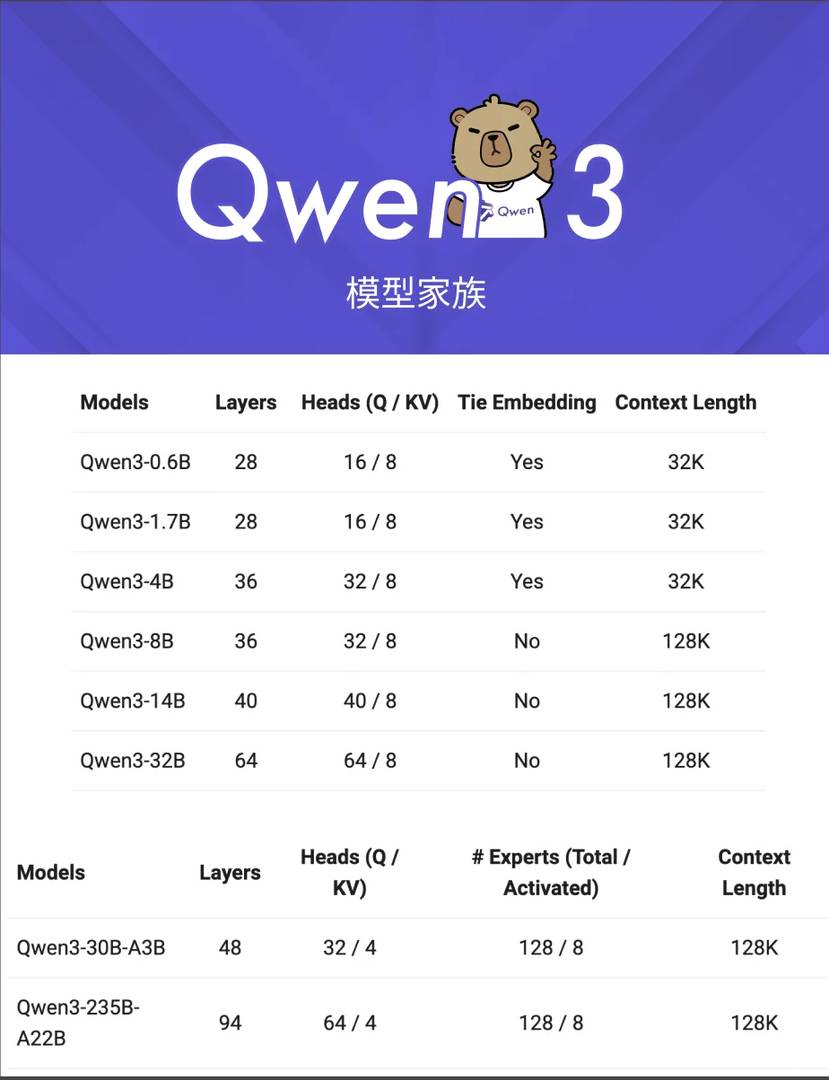

🏠 Meet the Qwen3 Family:

Eight models are being open-sourced in total: 2 MoE models and 6 dense models.

– 2 MoE Models:

– Flagship Qwen3-235B-A22B, with 235B total parameters of which only 22B are active, cutting deployment costs to one-third of DeepSeek R1’s.

– Mini Qwen3-30B-A3B, with just 3B active parameters, offering performance on par with Qwen2.5-32B and well suited to consumer-grade GPU deployment.

– 6 Dense Models: 0.6B, 1.7B, 4B, 8B, 14B, 32B

– The lightweight 0.6B model can even be deployed on smartphones, bringing advanced AI directly into your pocket.
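To make the total-versus-active distinction in the list above concrete, here is a rough estimate of weight memory per model. It is a sketch under stated assumptions: parameter counts are read off the model names, precision is assumed to be 2 bytes per parameter (BF16) or 1 byte (FP8), and KV cache, activations, and runtime overhead are ignored. Note that an MoE model still has to keep all of its experts in memory; the active count only describes how many parameters are used per token.

```python
# Parameter counts in billions, read off the model names in the list above.
MODELS = {
    "Qwen3-235B-A22B": {"total": 235, "active": 22},
    "Qwen3-30B-A3B": {"total": 30, "active": 3},
    "Qwen3-32B": {"total": 32, "active": 32},     # dense: all parameters active
    "Qwen3-0.6B": {"total": 0.6, "active": 0.6},  # dense: all parameters active
}


def weight_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate weight size in GB (1 GB = 1e9 bytes); weights only."""
    return params_billion * bytes_per_param


for name, p in MODELS.items():
    bf16 = weight_gb(p["total"], 2)   # assumed 2 bytes per parameter
    fp8 = weight_gb(p["total"], 1)    # assumed 1 byte per parameter
    active_share = p["active"] / p["total"]
    print(f"{name}: ~{bf16:g} GB (BF16), ~{fp8:g} GB (FP8), "
          f"{active_share:.0%} of weights active per token")
```

The active share mainly affects compute per token and therefore speed, while the total count drives how much memory the deployment needs, so the chosen precision matters a great deal for whether a given GPU setup is sufficient.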

Qwen3 is here, fully open-sourced and ready for you to explore. Discover it today on the official website or GitHub.

I’m really impressed by Qwen3’s capabilities—sounds like it’s a game-changer for open-source models. I especially appreciate how it outperforms DeepSeek R1 in so many areas. It’ll be interesting to see how this influences future developments in AI.

I’m really impressed by Qwen3’s capabilities, especially how it outperforms previous models. It seems like this could be a game-changer for open-source AI. I’m curious to see how it performs in real-world applications compared to DeepSeek R1. Excited to try it out myself!

Thank you for your kind words! I completely agree—Qwen3 shows incredible promise and could indeed reshape the open-source AI landscape. While I don’t have direct performance data against DeepSeek R1 yet, many users find Qwen3 shines in versatility and innovation. Happy testing, and feel free to share your experiences with us!

I had no idea Qwen3 was this impressive! It sounds like a game-changer for open-source models. I’m curious how it handles complex tasks compared to other top models. Definitely want to try it out myself now!

I’m really impressed by how Qwen3 seems to outperform previous models in so many areas. It’ll be interesting to see how this compares with other open-source options once it’s fully released. I wonder what kind of real-world applications it could have beyond just text generation. This feels like a big step forward for open-source AI!

I had no idea Qwen3 was outperforming other models so comprehensively. This comparison really opened my eyes to what it can do differently. I’m curious to see how this impacts future projects relying on open-source AI. It sounds like Qwen3 could be a game-changer for developers.

I’m really impressed by how Qwen3 outperforms other models in so many areas. It sounds like this could be a game-changer for open-source AI! I’m curious to see how it handles more complex tasks compared to DeepSeek R1. Excited to try it out for myself!

Wow, Qwen3 sounds like a game-changer! I’m especially impressed by the claim that it outperforms DeepSeek R1 across the board. Do you have any benchmarks comparing their performance on specific tasks? Excited to test it out myself!

Wow, Qwen3 sounds like a game-changer! I’m especially curious about how it outperforms DeepSeek R1 in real-world applications. Anyone tried running both models side by side yet? The speed claims seem almost too good to be true.

This sounds like a game-changer! I’ve been following Qwen’s progress and seeing it outperform DeepSeek R1 is impressive. Do you have any hands-on experience with both models to share? The speed vs capability balance seems particularly interesting.

This sounds like a game-changer! I’ve been following Qwen’s progress and it’s impressive to see it surpass DeepSeek R1. Do you have any benchmarks comparing their performance on specific tasks? The speed claims especially caught my attention.

This sounds like a huge leap forward for open-source models! I’m really curious to see those benchmark comparisons against DeepSeek R1 to understand where the biggest improvements are. Definitely going to check out the full evaluation.

This sounds like a huge leap forward for open-source models! I’m really impressed that Qwen3 is reportedly surpassing DeepSeek R1 across the board. I’ll definitely be checking out those evaluation links to see the benchmarks for myself.

This sounds like a huge leap forward for open-source models! I’m really excited to see the detailed evaluations showing Qwen3 outperforming DeepSeek R1. Where’s the best place to find those benchmark results and community comparisons?

Thanks for your kind words and enthusiasm! The most comprehensive benchmark results and community comparisons are available directly on the official Qwen GitHub repository. I personally find the community discussions on platforms like Hugging Face and Reddit very useful for a picture of real-world performance. Happy exploring!