GPT-4.1 vs Wenxin Yiyan: Surprising Performance Results Revealed in Latest AI Evaluation

🔥 The KCORES LLM Arena is now live! First up: the GPT-4.1 stress test. No gimmicks, just substance!

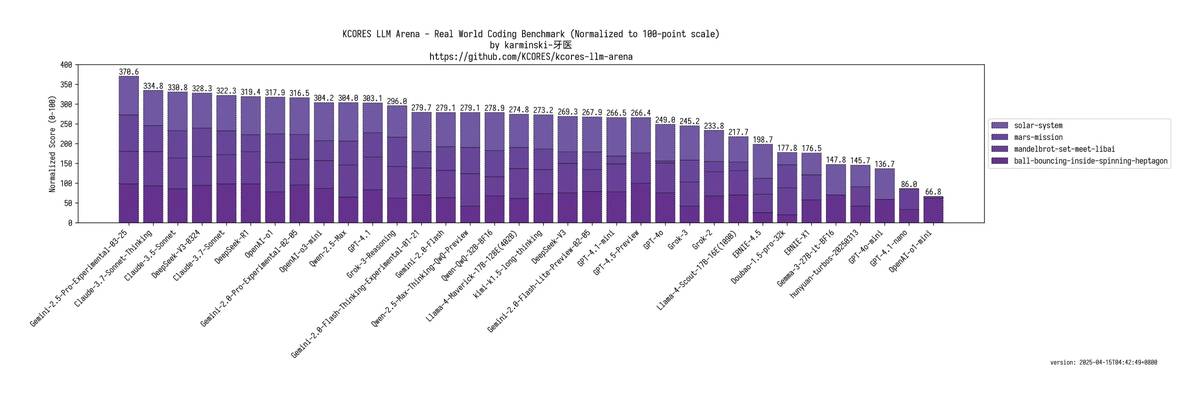

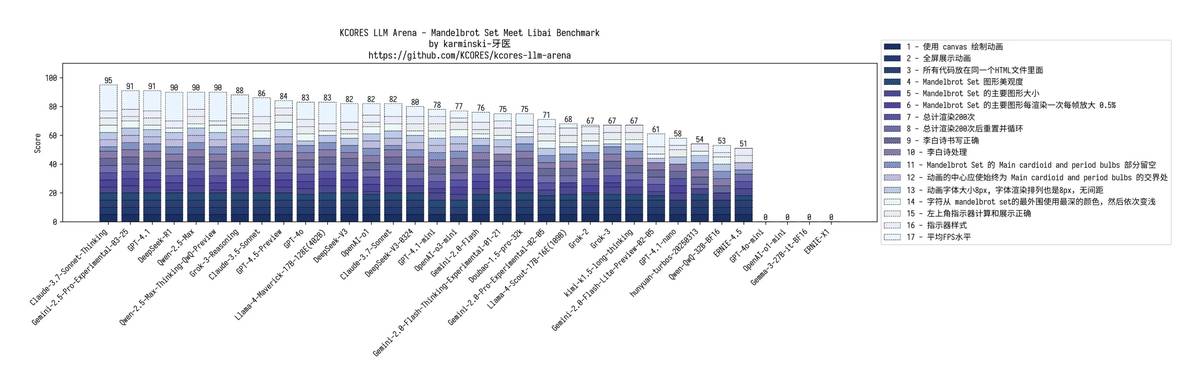

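🌟 Showdown report:

🏆 Gemini-2.5-Pro reigns supreme; its hold on the top of the leaderboard is beyond question

💥 GPT-4.1 ≈ Qwen-2.5-Max, and it is actually overtaken by OpenAI-O3-mini-high and o1

💰 GPT-4.1-mini ≈ the classic DeepSeek-V3, effectively a "budget GPT-4.5"

😱 GPT-4.1-nano: crushed by Wenxin Yiyan, and the experience simply falls apart…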

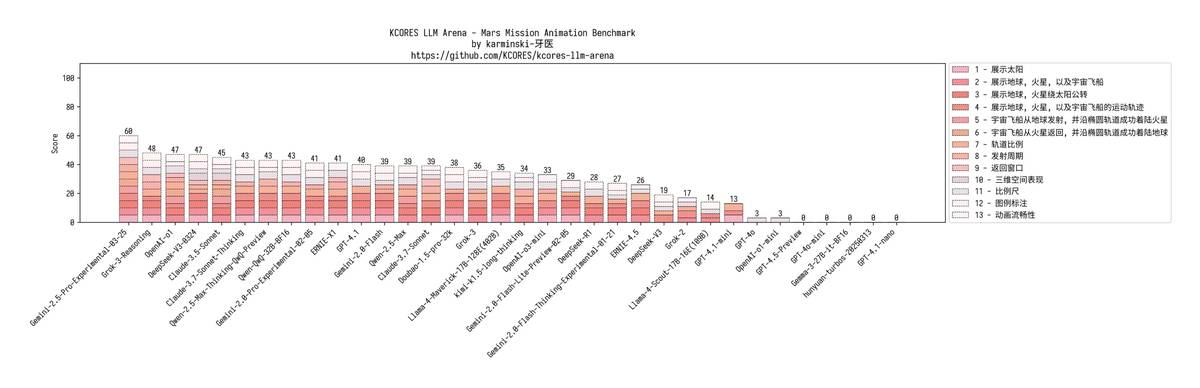

📊 Full record of the hands-on tests (brace yourself):

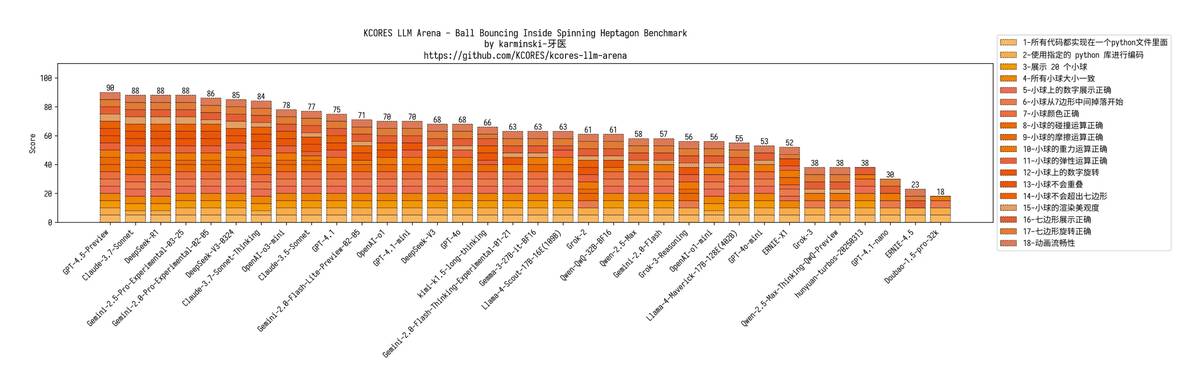

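1. 🎮 20-ball physics engine challenge

GPT-4.1: solid code quality, but the friction and rotation effects are missing

mini: also stumbles; nano fares even worse, with only a single ball left bouncing around on its own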

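2. 🎨 Mandelbrot set art

GPT-4.1: colors completely inverted, and the image overflows

mini: loses points for not rendering full screen

nano: fails to follow the instructions, stray text intrudes, the core is misplaced; a complete collapse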

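3. 🚀 Mars landing stress test

Things get progressively uglier:

GPT-4.1: orbits are scrambled and the launch windows are all wrong

mini: does not even render the planets or the spacecraft

nano: the code throws an error outright and refuses to run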

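4. ☄️ Solar system simulation exam

GPT-4.1: Mercury overlapping the Sun? A geocentric renaissance?

mini: unexpectedly solid

nano: draws a few circles and calls it a day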

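💡 Final verdict:

With GPT-4.1, the higher the expectations, the bigger the disappointment: billed as an upgrade, it turns out to be "unremarkable".

Want the very best? Gemini is still the first choice. On a budget? mini can just about hold its own. As for nano… best to give up on it!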

🤖 Over to you:

Which AI model has impressed you lately? And which one let you down badly?

Head to the comments and kick off the "AI model roast"!

Wow, the performance gaps between these models are way bigger than I expected! Especially that nano version of GPT-4.1 really struggled in all those tests – didn’t even render a proper spaceship for the Mars landing challenge. It’s fascinating to see how even small changes in model size can lead to such dramatic differences in capabilities. I wonder what this means for future AI development priorities.

Absolutely, it’s amazing how sensitive these models are to scaling. The nano version’s struggles highlight the importance of finding the right balance between efficiency and capability. I think this will push developers to explore more innovative ways to optimize smaller models without sacrificing performance. Thanks for your insightful comment—really got me thinking!

Wow, the results are really surprising! Especially how GPT-4.1 performed so well overall but had some major hiccups in those specific challenges. It’s interesting to see how different models handle complex tasks when pushed to their limits. I wonder if these tests will change anything for future updates or releases.

Absolutely, it’s fascinating to see how each model reacts under pressure. While these tests might not directly influence future updates, they do provide valuable insights for developers. It’s likely we’ll continue seeing improvements as teams learn from these findings. Thanks for your thoughtful comment—it’s clear you’re deeply engaged with the topic!

Wow, the performance gaps between these models are way more dramatic than I expected. Especially surprising was how GPT-4.1’s nano version completely fell apart in those tests—it’s a good reminder that smaller versions aren’t just scaled-down versions of the full model. I wonder how much of this comes down to resource limitations versus fundamental design differences. The Mars landing test failure for nano was especially funny (in a sad way).

Wow, the nano version of GPT-4.1 really didn’t do well at all in those tests. It’s surprising to see how much of a difference there is between the different versions. I wonder if the higher-end models will hold up better against more complex tasks. This evaluation definitely gives a good sense of where each model stands.

Absolutely, the performance gap between versions can be quite striking. I think the higher-end models are likely to handle complex tasks more effectively, but it’s always good to see direct comparisons like this. Your curiosity reflects what many of us are thinking—thanks for sharing! I personally find these evaluations fascinating because they highlight how nuanced the differences can be.

Wow, the nano version of GPT-4.1 really didn’t hold up well in those tests. It’s interesting to see how different models handle complex tasks like the Mars landing simulation—some really faltered under pressure. I wonder if these results will change how people think about model size and capability. The physics engine challenge was especially telling for comparing performance across versions.

Wow, the performance gaps between these models are way more dramatic than I expected. Especially shocking to see GPT-4.1-nano completely fall apart in those tests. It looks like even the top-tier models can struggle with really complex tasks when pushed to their limits. But I’m curious – did they mention anything about how long it took each model to generate responses?

Wow, the results are really surprising! Especially how GPT-4.1 performed so well overall but had some major hiccups in specific tests like the physics engine. It’s interesting to see how different models handle niche tasks like Mandelbrot art compared to more general challenges like Mars landing simulations.

Wow, didn’t expect such a big gap between the different versions of GPT-4.1. The nano version really seems to struggle in those complex tasks. Wenxin Yiyan held its own though, especially against the lower-tier GPT-4.1 models. It’ll be interesting to see how these AI models continue to evolve and improve.

Wow, the performance gap between these models is insane! I didn’t expect GPT-4.1-nano to struggle so much with basic tasks. The physics engine test was especially eye-opening—seeing GPT-4.1-mini down to just one ball was hilarious. It really highlights how much difference model size and optimization can make.

Absolutely agree! It’s fascinating to see how subtle differences in model design can lead to such dramatic performance shifts. Thanks for pointing that out—it’s always great to hear readers’ insights on these comparisons. I think these tests really underscore the importance of tailoring models to specific use cases. Appreciate you sharing your thoughts!

Wow, didn’t expect such a big gap between the different versions of GPT-4.1. The nano version really didn’t hold up well in those tests. Wenxin Yiyan gave it quite a run for its money too, especially in the artistic tasks. Guess when it comes to AI, every little tweak can make a huge difference!

Absolutely agree! It’s fascinating how even minor adjustments can lead to such varied performance across models. The nano version certainly had some limitations, but Wenxin Yiyan’s strong showing in artistic tasks highlights the unique strengths each model brings. Thanks for your insightful comment—these discussions really help us all learn more about AI’s evolving capabilities!

Wow, the results are really surprising! Especially how GPT-4.1 performed so well overall but then completely fell apart in some of the nano versions’ tests. I had no idea Wenxin Yiyan could outperform it in certain scenarios like that. Definitely gives me a lot to think about regarding these models’ real-world applications.

Wow, the nano version of GPT-4.1 really didn’t hold up well at all—sounds like it hit rock bottom in that Mars landing test. But hey, the full-size GPT-4.1 did pretty solid on the physics engine until those missing friction effects threw it off track. These comparison tests are wild, especially how Wenxin Yiyan completely crushed the nano variant. I wonder what this means for future updates though!

Absolutely agree! The nano version certainly faced some tough challenges, especially with complex tasks like the Mars landing simulation. It’s fascinating to see how even small details like friction can make such a big difference. As for future updates, I think we’ll likely see more refined models that address these gaps—exciting times ahead! Thanks for your insightful comments!

Wow, the results are definitely surprising! Especially how GPT-4.1 performed so well overall but had some major hiccups in specific tests like the physics engine and Mars landing simulation. It’s interesting to see these high-end models struggle with more complex tasks when they’re expected to shine.

Wow, the performance gaps between these models are way more dramatic than I expected! Especially shocking to see GPT-4.1-nano completely fall apart in those tests. The physics engine challenge was a particularly telling moment – GPT-4.1 handled it decently but still missed some key details. It’s fascinating how much the model size and optimization really impact the results.

Wow, these results are wild! I didn’t expect GPT-4.1 to struggle so much in some of those tests, especially compared to Wenxin Yiyan. The nano versions really seem like they’re cutting corners. Guess when it comes to complex tasks, cheaper models still have a long way to go.

Thanks for sharing your thoughts! It’s interesting how different models handle various tasks—each has its own strengths and areas for improvement. While cost is definitely a factor, I think we’ll see these cheaper models closing the gap fast. A great discussion that prompts deeper thinking about AI capabilities!

Wow, the nano version of GPT-4.1 really didn’t hold up well in those tests. It’s surprising to see such a big drop-off in performance compared to the other versions. The physics engine challenge was especially telling—losing friction and rotation just broke the whole experience. I wonder how much of this comes down to resource constraints versus actual model capability.

Wow, these results are definitely surprising! Especially how the nano versions struggled so much in those tests. It’s interesting to see GPT-4.1 perform well overall but still get outshone by certain configurations or other models in specific challenges. I wonder how much of this comes down to optimization versus actual capability.

Wow, the performance gaps between these models are wild! Especially surprising to see GPT-4.1 struggling so much in those hands-on tests – reminds me how much can go wrong under pressure. But kudos to Wenxin for holding its ground; looks like it’s got some serious tricks up its sleeve. This kind of detailed breakdown really helps clarify what each model does best (and worst).

Wow, the results are definitely surprising! Especially how GPT-4.1’s performance varied so much between the different versions. That physics engine challenge was a real eye-opener – who knew friction and rotation mattered so much? It’ll be interesting to see if these trends hold up in future evaluations.

Wow, the performance gap between GPT-4.1’s different versions is wild – nano getting crushed by Wenxin is unexpected! The physics engine test where only one ball remained had me laughing though. Would love to see how these models handle more real-world creative tasks beyond these benchmarks.

Wow, the performance gap between GPT-4.1’s different versions is way bigger than I expected! The nano version getting crushed by Wenxin Yiyan is honestly shocking – makes me wonder if OpenAI cut too many corners with that one. Really curious to see how these models evolve in the next few months.

Wow, the performance gap between GPT-4.1’s different versions is way bigger than I expected! The nano version struggling so badly with basic tasks makes me wonder if it’s even worth using. Also, Wenxin Yiyan beating it in some areas is a real plot twist – guess the competition is heating up!

Wow, the performance gap between GPT-4.1’s different versions is way bigger than I expected! The nano version getting crushed by Wenxin Yiyan is honestly shocking. Those physics engine and Mars landing test fails sound brutal though – makes me wonder if OpenAI rushed these smaller models out too quickly.

Wow, these results are really unexpected! I thought GPT-4.1 would dominate across the board, but seeing Wenxin Yiyan outperform it in some tasks is fascinating. The physics engine test where nano version failed so badly is especially surprising – makes me wonder about the real-world tradeoffs in these scaled-down models.

Thanks for your thoughtful comment! The physics engine results were surprising to us too—it highlights how specialized optimizations in Wenxin Yiyan can shine in niche tasks. Smaller models like the nano version often sacrifice robustness for efficiency, which explains the tradeoff. Always fascinating to see how different architectures play to their strengths!

Wow, the performance gap between GPT-4.1’s different versions is way bigger than I expected! The nano version getting crushed by Wenxin Yiyan is honestly shocking – makes me wonder if OpenAI cut too many corners with that one. Really curious to see how these models evolve in the next round of testing.

Wow, these results are genuinely surprising! I didn’t expect Wenxin Yiyan to outperform GPT-4.1-nano so clearly in some tests. The physics engine and rendering challenges really highlighted the performance gaps. Really makes you think about how much model size and optimization matter.